AI Has a Memory Problem - and It's Not the One You Think

Tommi Hippeläinen

January 31, 2026

The first time an AI agent makes a mistake, we shrug.

The second time, we tune the prompt.

The third time, we add memory.

And that's where most teams stop thinking.

They add a longer context window. Or a vector database. Or a clever retrieval layer. Suddenly the agent "remembers" past conversations, past documents, past preferences. It feels smarter. More human. More reliable.

Until the day it does something that actually matters.

A discount gets approved that shouldn't have.

Access is granted when policy says no.

A deployment bypasses safeguards because "it looked similar to last time."

A regulator asks a simple question: Why was this allowed?

And nobody has a good answer.

Because what we've been calling "memory" in AI systems has been solving the wrong problem.

The Memory We Gave AI First

Short-term memory was the obvious place to start.

It mirrors how humans think while working through a task. A conversation has flow. A chain of reasoning needs continuity. An agent solving a multi-step problem needs to remember what it just did.

So we gave AI working memory. Context windows. Conversation history. Tool call state. Scratchpads.

It works beautifully - until it doesn't.

Short-term memory is fragile by design. It disappears when the session ends. It gets truncated when it grows too large. It's optimized for thinking, not for remembering responsibly.

When short-term memory is wrong, the cost is usually low. You retry. You regenerate. You shrug and move on.

That's fine - as long as the stakes are low.

Then We Gave AI a Past

Long-term memory came next.

This felt like a breakthrough. Instead of just thinking in the moment, the agent could now recall. It could search past conversations, documents, tickets, and notes. It could personalize. It could adapt.

Vector databases exploded in popularity because they solved a real pain point: not everything relevant fits in a prompt.

Now the agent could remember that a customer prefers short answers. That a similar issue happened last quarter. That a document somewhere probably contains the answer.

Long-term memory made agents feel experienced.

But it also quietly changed the nature of trust.

Long-term memory is fuzzy. It's approximate. It's optimized for similarity, not truth. It doesn't care when something was true, only that it sounds close enough.

That's fine for recall. It's dangerous for decisions.
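To make the point concrete, here is a minimal sketch of similarity-based recall. It assumes toy three-dimensional vectors standing in for real embeddings, and the memory texts and dates are invented for illustration. Ranking is by cosine similarity alone, so the stored date never influences which memory comes back, and an older, superseded precedent can outrank the rule that replaced it.

```python
import math
from dataclasses import dataclass
from datetime import date


@dataclass
class Memory:
    text: str
    vector: list[float]  # toy embedding (hypothetical values, not from a real model)
    recorded_on: date    # stored, but never consulted by the ranking below


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def recall(query: list[float], memories: list[Memory]) -> Memory:
    """Return the most similar memory; recency and validity play no role."""
    return max(memories, key=lambda m: cosine(query, m.vector))


memories = [
    Memory("Finance approved a 30% renewal discount", [0.9, 0.1, 0.0], date(2023, 3, 1)),
    Memory("Discounts above 20% now require CFO sign-off", [0.6, 0.6, 0.2], date(2025, 6, 1)),
]

query = [1.0, 0.1, 0.0]  # stands in for "can we give this customer a 30% discount?"
best = recall(query, memories)
print(best.text, best.recorded_on)  # prints: Finance approved a 30% renewal discount 2023-03-01
```

The newer rule is retrieved less strongly not because it is less true, but because it is phrased less like the question.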

Because when an agent acts, it doesn't just retrieve information. It commits the organization to an outcome.

And that's where the third kind of memory becomes unavoidable.

The Memory Nobody Designed For

Imagine a finance agent that proposes a renewal discount above the policy limit.

It pulls data from the CRM. It checks usage. It recalls that "last time something similar happened, finance approved it."

The discount is applied.

Weeks later, someone asks: Why was this allowed?

Not what data the agent saw.

Not what it remembered.

Not what it reasoned about.

Why was it allowed?

There is no vector search that can answer that question.

Because the answer isn't knowledge. It's a commitment.

This is the moment where AI systems collide with reality.

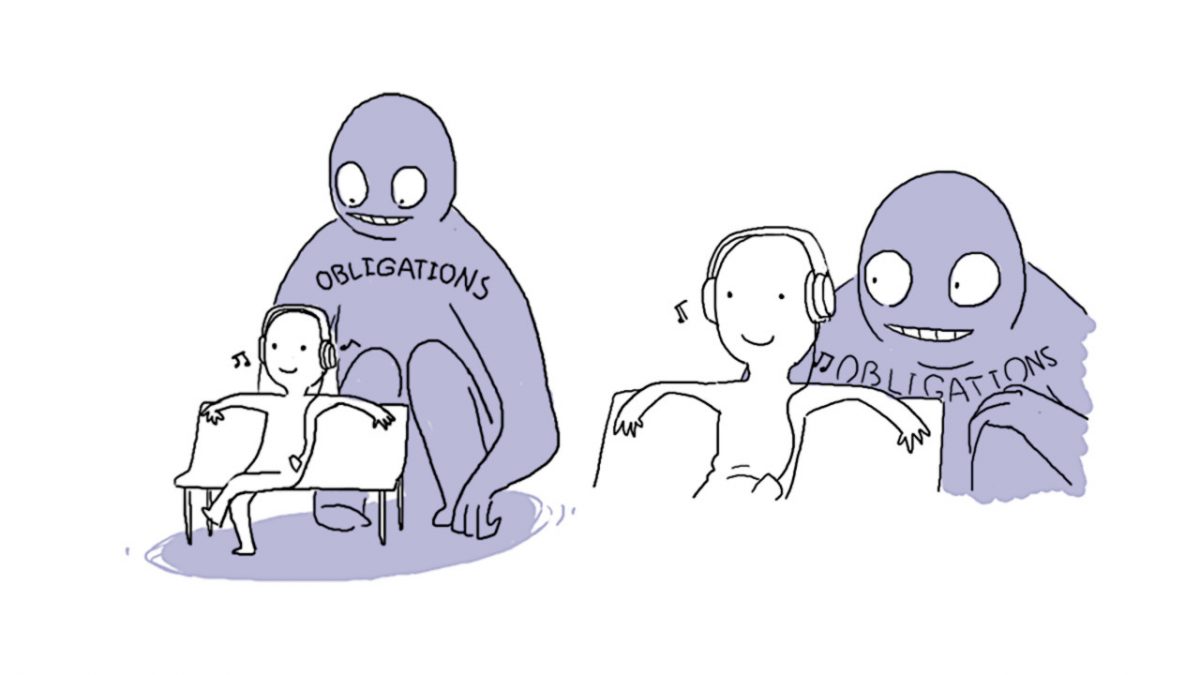

Organizations don't run on memory alone. They run on decisions - explicit, constrained, accountable decisions that affect customers, money, access, and risk.

And decisions create obligations.

Decision Memory Is Not "Smarter Memory"

Decision memory is not a better embedding strategy.

It's not chain-of-thought storage.

It's not learning from past outputs.

Decision memory exists for a different reason entirely. It answers a separate class of question:

What was decided?

Under which policy?

By whom?

With which approvals?

And what was the outcome?
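One way to make those five questions concrete is a record type with a field for each. This is a minimal sketch, not a prescribed schema: the class name `DecisionRecord`, its field names, the agent and approver names, and the policy identifier are all hypothetical. The dataclass is frozen to reflect the idea that a recorded commitment is not edited after the fact.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical schema: one field per question decision memory must answer."""
    decision: str               # What was decided?
    policy: str                 # Under which policy?
    decided_by: str             # By whom?
    approvals: tuple[str, ...]  # With which approvals?
    outcome: str                # And what was the outcome?
    decided_at: datetime        # When the commitment was made


def why_allowed(record: DecisionRecord) -> str:
    """Answer 'Why was this allowed?' from the record alone, with no retrieval."""
    approvers = ", ".join(record.approvals) or "no one"
    return (
        f"Decision '{record.decision}' was made by {record.decided_by} "
        f"under {record.policy}, approved by {approvers}, "
        f"on {record.decided_at:%Y-%m-%d}. Outcome: {record.outcome}."
    )


record = DecisionRecord(
    decision="Apply 30% renewal discount",
    policy="pricing-policy-v4 (exception clause)",
    decided_by="renewals-agent",
    approvals=("finance-lead",),
    outcome="discount applied to renewal invoice",
    decided_at=datetime(2026, 1, 15, tzinfo=timezone.utc),
)
print(why_allowed(record))
```

The answer is assembled from what was recorded at the time, not reconstructed from whatever happens to look similar later.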

This memory doesn't belong to the agent. It belongs to the organization.

It doesn't fade. It doesn't approximate. It doesn't optimize for relevance. It records commitments exactly as they happened, anchored in time and governance.
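"It doesn't fade" and "records commitments exactly as they happened" map naturally onto an append-only log. The sketch below uses hash chaining, one common technique assumed here for illustration: each entry stores the hash of the previous one, so quietly rewriting history breaks verification. The `DecisionLog` class and its methods are hypothetical; a real system would also need durable storage and access control.

```python
import copy
import hashlib
import json
from datetime import datetime, timezone


class DecisionLog:
    """Hypothetical append-only log: entries are added, never updated or deleted."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self) -> None:
        self._entries: list[dict] = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically.
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

    def append(self, decision: dict) -> str:
        prev = self._entries[-1]["hash"] if self._entries else self.GENESIS
        body = {
            # Copy so later changes to the caller's dict cannot alter the stored entry.
            "decision": copy.deepcopy(decision),
            "recorded_at": datetime.now(timezone.utc).isoformat(),
            "prev_hash": prev,
        }
        entry = {**body, "hash": self._digest(body)}
        self._entries.append(entry)
        return entry["hash"]

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = self.GENESIS
        for entry in self._entries:
            body = {k: entry[k] for k in ("decision", "recorded_at", "prev_hash")}
            if entry["prev_hash"] != prev or entry["hash"] != self._digest(body):
                return False
            prev = entry["hash"]
        return True


log = DecisionLog()
log.append({"action": "apply_discount", "pct": 30, "policy": "pricing-policy-v4"})
log.append({"action": "grant_access", "resource": "billing", "policy": "access-policy-v2"})
print(log.verify())  # True

# Simulate someone editing a past decision directly in storage.
log._entries[0]["decision"]["pct"] = 15
print(log.verify())  # False
```

Hash chaining makes tampering detectable, not impossible; preventing it is a job for storage permissions and separation of duties.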

When short-term memory fails, you retry.

When long-term memory drifts, you recalibrate.

When decision memory is missing, you are exposed.

That difference matters more than most teams realize.

Why Developers Should Care (Even If They Don't Yet)

For a long time, developers didn't need to think about this.

If an application made a bad decision, a human could usually explain it away. Or undo it. Or absorb the cost quietly.

AI changes that math.

Agents act faster, more often, and across more systems than humans ever did. They don't just assist - they execute. And every execution creates a trail of consequences.

At some point, every developer building agents runs into the same wall:

"I can make the agent smarter, but I can't explain why it did that."

Decision memory is what turns that wall into an interface.

It allows developers to build agents that are not just capable, but defensible: systems that can say with confidence that this wasn't a hallucination - it was an approved exception under policy X, signed off by Y, using data Z.
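"An approved exception under policy X, signed off by Y, using data Z" also suggests where decision memory sits: at the point of action, before the agent executes. Here is a minimal sketch of such a gate for the discount example. The 20% limit, the policy identifier, the evidence IDs, and the names `decide_discount` and `Decision` are all hypothetical. Within the limit the policy itself allows the action; above it, the action is allowed only with a named approver; either way, the policy, approver, and data end up in the result. Similarity to a past case never counts as authorization.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy values, chosen only for illustration.
POLICY_ID = "pricing-policy-v4"
DISCOUNT_LIMIT_PCT = 20


@dataclass(frozen=True)
class Decision:
    allowed: bool
    policy: str                 # policy X
    approved_by: Optional[str]  # signer Y for an exception, if any
    evidence: tuple[str, ...]   # data Z the decision relied on
    reason: str


def decide_discount(pct: int, evidence: tuple[str, ...],
                    approver: Optional[str] = None) -> Decision:
    """Gate a discount on explicit policy, not on similarity to past cases."""
    if pct <= DISCOUNT_LIMIT_PCT:
        return Decision(True, POLICY_ID, None, evidence,
                        f"{pct}% is within the {DISCOUNT_LIMIT_PCT}% limit")
    if approver is None:
        return Decision(False, POLICY_ID, None, evidence,
                        f"{pct}% exceeds the {DISCOUNT_LIMIT_PCT}% limit with no approval")
    return Decision(True, POLICY_ID, approver, evidence,
                    f"approved exception: {pct}% above the {DISCOUNT_LIMIT_PCT}% limit")


evidence = ("crm:account/ACME-042", "usage:q4-report")
print(decide_discount(30, evidence))                           # denied: no approver
print(decide_discount(30, evidence, approver="finance-lead"))  # allowed as an exception
```

Returning a structured `Decision` rather than a bare boolean is the design choice that matters here: the explanation is produced at the moment of commitment instead of being reconstructed later.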

That's not just compliance. That's engineering maturity.

Why Organizations Inevitably Demand It

Organizations already understand decision memory - they just haven't called it that.

Contracts. Approvals. Audit logs. Change records. Exception reports. These exist because organizations know that actions without accountability don't scale.

AI doesn't remove that need. It amplifies it.

When an agent makes ten decisions a day, nobody cares.

When it makes ten thousand, everyone does.

At that point, decision memory stops being a nice-to-have and becomes infrastructure.

Not because regulators demand it.

Not because auditors ask for it.

But because without it, trust collapses.

And without trust, autonomy stalls.

The Shift That's Already Underway

The industry has spent years arguing about how to give AI better memory.

Bigger context windows.

Better retrieval.

Richer embeddings.

Those matter - but they don't solve the hardest problem.

The hardest problem is not remembering more.

It's remembering what the system committed to.

That's the memory organizations actually care about.

Short-term memory helps agents think.

Long-term memory helps agents adapt.

Decision memory helps organizations trust.

And once you see that distinction, you can't unsee it.

The Inevitable Conclusion

As AI systems move from suggestion to execution, decision memory stops being optional.

Not as a feature.

Not as a prompt trick.

But as a first-class layer in the stack.

Because intelligence without accountability doesn't scale.

And memory without commitment isn't enough.

The future of AI isn't just smarter agents.

It's agents whose decisions can stand on their own.