From Punch Cards to AI

Tommi Hippeläinen

December 11, 2024

Tradeoffs Between Abstraction and Control

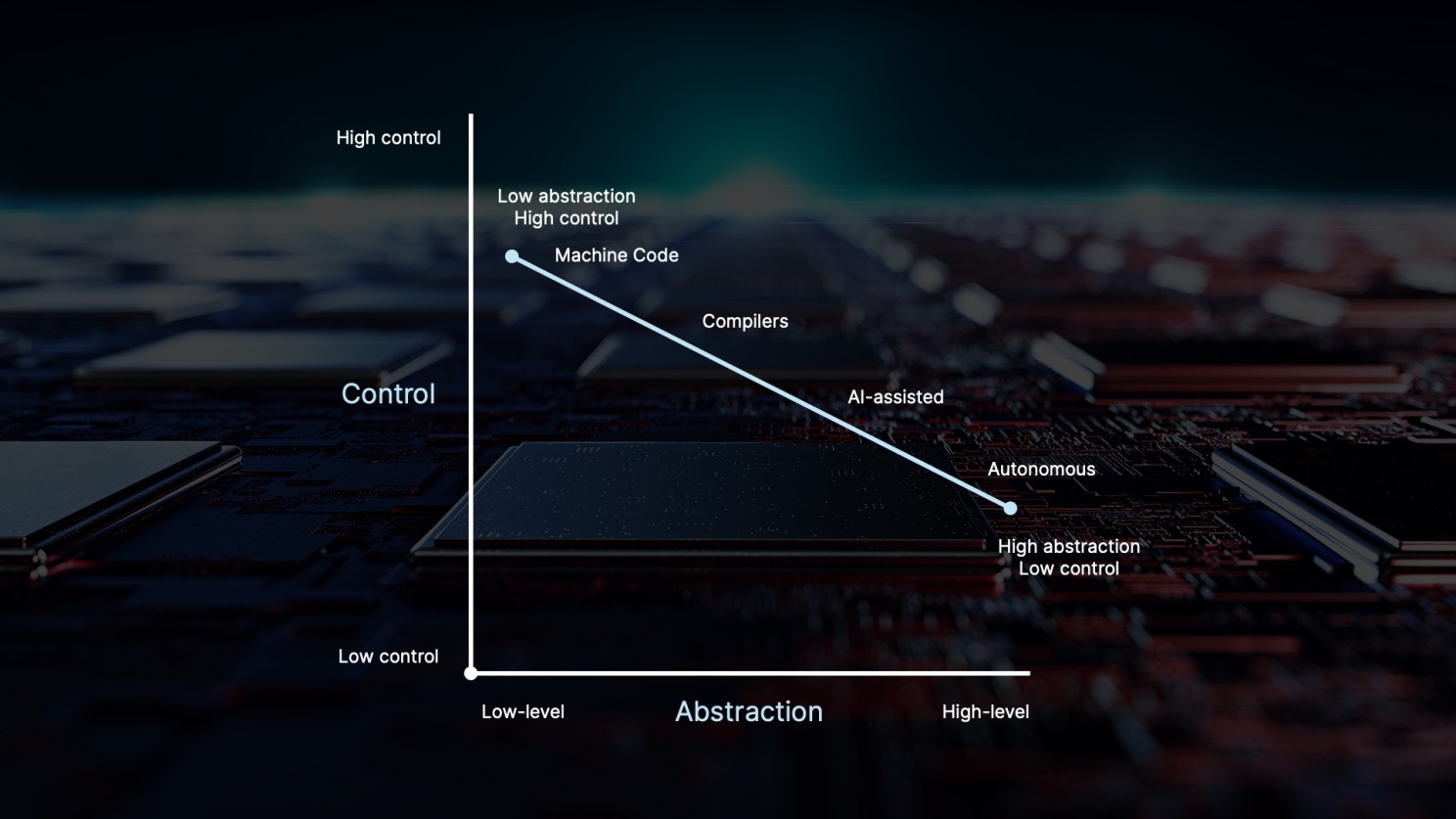

Throughout the history of software development, the evolution of programming languages and methodologies has steadily raised the level of abstraction. Each new breakthrough offered developers more convenience, shielding them from low-level details and allowing them to focus on the logic and structure of their applications. However, this shift toward higher abstractions has always come with a tradeoff: as the level of abstraction rises, fine-grained control over hardware resources and performance details decreases. While this tradeoff is acceptable and even desirable for many applications, there remain scenarios where control and optimization cannot be sacrificed.

A Historical Perspective on Abstractions

The earliest days of computing involved programming by manually setting switches, manipulating patch panels, or entering instructions through punch cards. Developers had absolute control over the machine’s behavior, carefully choosing instructions and memory addresses. This control came at a cost: every detail had to be painstakingly managed, limiting productivity and making even small changes labor-intensive.

The introduction of assembly languages provided a thin layer of abstraction. Mnemonic codes replaced raw binary instructions, making code more readable and slightly easier to manage. Still, developers dealt directly with registers and memory addresses, retaining full control at the instruction level. The tradeoff was minimal: assembly offered only symbolic convenience, not conceptual leaps.

High-level languages like FORTRAN and COBOL changed the game in the late 1950s and 1960s. Compilers transformed human-readable code into optimized machine instructions, freeing developers from managing hardware details. This greatly improved productivity and lowered the barrier to entry for programming. At the same time, it reduced granular control. For many problem domains—scientific computations, business data processing—this was not only acceptable but highly beneficial.

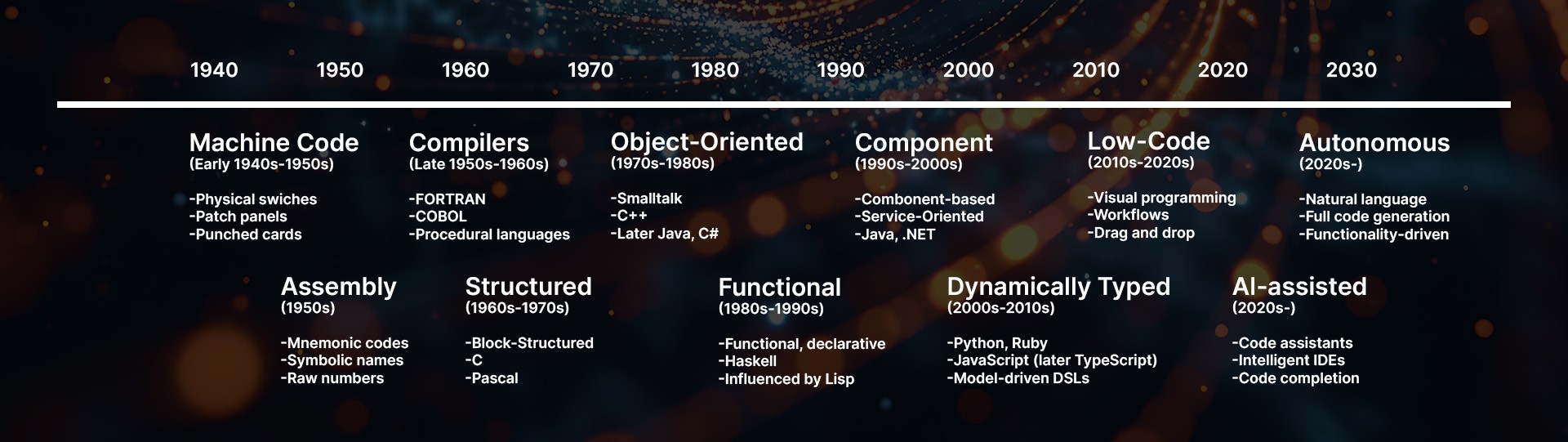

Chronological timeline of shifts in software development

Over time, structured programming, object-oriented paradigms, functional and declarative languages, and higher-level frameworks and runtimes further abstracted away the underlying hardware. They introduced conceptual models that mirrored how developers think about problems. Domain-specific languages (DSLs) and model-driven development focused on expressing logic in terms of the business or application domain. Low-code and no-code platforms allowed solutions to be assembled visually, with minimal explicit coding.

Each of these steps improved convenience and productivity, but also distanced developers from the control they once had. Memory management, specific CPU instructions, and fine-tuned data placement became the domain of the compiler, the runtime, or the platform framework.

Abstraction versus control

When Control Still Matters

Not all applications benefit equally from higher abstractions. Consider high-performance game engines, where precise timing, memory layout, and CPU/GPU optimizations can mean the difference between smooth gameplay and stuttering frames. Consider embedded systems in critical environments, where limited memory and processor speed demand careful low-level optimization. In database engines or disk storage systems, placing data optimally on specific blocks can yield major performance gains. In these scenarios, higher-level abstractions may impede the developer’s ability to squeeze out every ounce of performance or guarantee exact system behavior.
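
To make "memory layout" concrete, here is a minimal C sketch of one decision that low-level code leaves to the developer: storing particle data as an array of structs (AoS) or as a struct of arrays (SoA). The type and function names (`ParticleAoS`, `ParticlesSoA`, `sum_x_aos`, `sum_x_soa`) and the fixed particle count are hypothetical, chosen only for illustration.

```c
#include <stddef.h>

#define N_PARTICLES 4  /* hypothetical fixed size for the example */

/* Array of structs (AoS): each particle's fields are stored together. */
struct ParticleAoS {
    float x, y, z;
    float mass;
};

/* Struct of arrays (SoA): each field is stored in its own contiguous array. */
struct ParticlesSoA {
    float x[N_PARTICLES];
    float y[N_PARTICLES];
    float z[N_PARTICLES];
    float mass[N_PARTICLES];
};

/* AoS: consecutive x values are sizeof(struct ParticleAoS) bytes apart,
   so the cache lines this loop loads also carry y, z and mass values
   that it never reads. */
float sum_x_aos(const struct ParticleAoS *p, size_t n) {
    float total = 0.0f;
    for (size_t i = 0; i < n; i++) {
        total += p[i].x;
    }
    return total;
}

/* SoA: the x values are adjacent in memory, so the loop walks
   through one dense, contiguous array. */
float sum_x_soa(const struct ParticlesSoA *p, size_t n) {
    float total = 0.0f;
    for (size_t i = 0; i < n; i++) {
        total += p->x[i];
    }
    return total;
}
```

Which layout performs better depends on the access pattern, the compiler, and the hardware; the sketch measures nothing. It only shows that in C the choice, and with it the tuning opportunity, belongs to the developer rather than to a runtime.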

For such work, lower-level languages like C, or even assembly, remain relevant. The developer’s deep involvement with hardware-oriented details ensures that no cycle is wasted and no critical optimization is left on the table. The tradeoff here is between convenience and raw, uncompromising control. High-level languages can never exceed the control achievable at the low level, because abstraction inherently means that someone or something else (a compiler, a runtime) is making decisions on your behalf.

Evolving Bug Patterns at Different Abstraction Levels

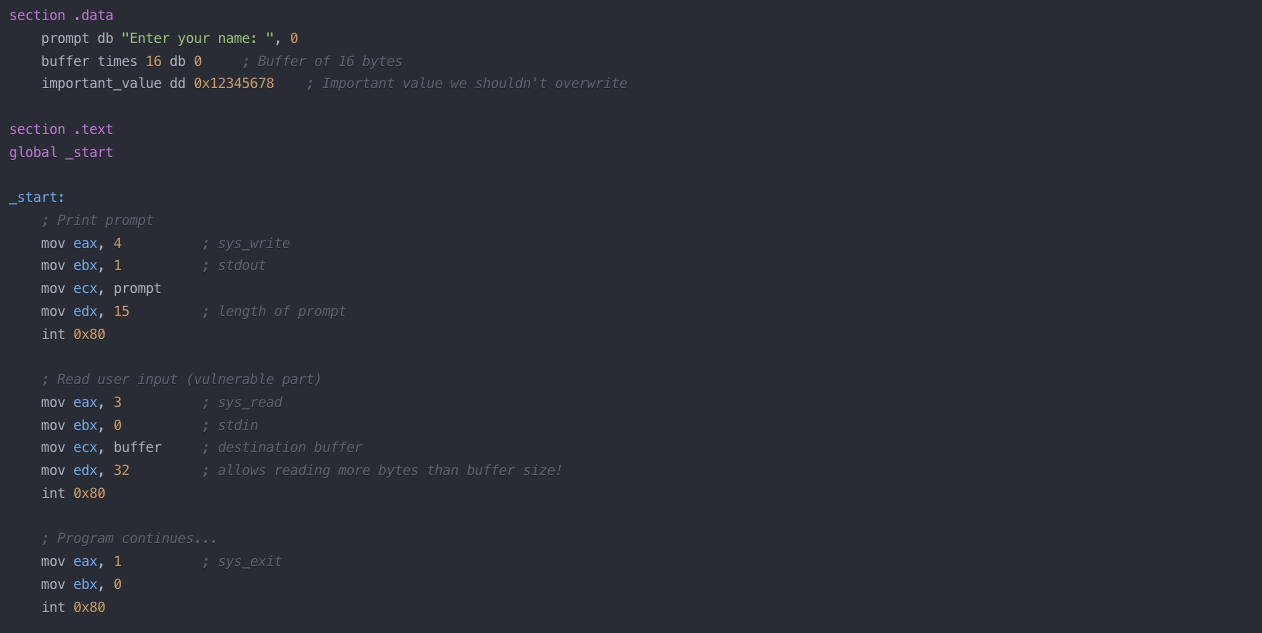

Abstractions not only impact performance and productivity, but also the types of bugs that developers encounter. At low levels, memory management errors—dangling pointers, buffer overflows, manual allocation/deallocation issues—were common. As compilers and runtimes took over these responsibilities, such bugs became far less frequent. Higher abstractions eliminated entire categories of low-level errors by preventing direct access to memory and resources.

Example of a buffer overflow error in Assembly language
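
The figure illustrates the bug in assembly; the same class of error is just as easy to write in C, where nothing checks that a write stays inside its buffer. Below is a minimal sketch contrasting the classic unchecked `strcpy` pattern (shown only in a comment, since running it is undefined behavior) with a bounded copy. `copy_bounded` is a hypothetical helper written for this example, not a standard C function.

```c
#include <stddef.h>
#include <string.h>

/* The classic overflow: strcpy() keeps writing until it reaches the
   source's terminating '\0', regardless of how large dst is.

       char buf[8];
       strcpy(buf, "this string is far too long");   // writes past buf

   The hypothetical bounded alternative below makes the caller pass the
   destination size and never writes more than dst_size bytes. */

/* Copies at most dst_size - 1 characters from src into dst and always
   NUL-terminates when dst_size > 0. Returns 1 if src was truncated,
   0 if it fit completely. */
int copy_bounded(char *dst, size_t dst_size, const char *src) {
    if (dst_size == 0) {
        /* Nothing can be written; report truncation if src is non-empty. */
        return src[0] != '\0';
    }
    size_t len = strlen(src);
    size_t n = len < dst_size - 1 ? len : dst_size - 1;
    memcpy(dst, src, n);
    dst[n] = '\0';
    return n < len;
}
```

Most memory-safe, higher-level languages make this check implicit: an out-of-bounds write raises an error instead of silently corrupting adjacent memory, which is the category of bug the text describes as disappearing.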

However, this shift did not remove bugs altogether; it merely changed their nature. With object-oriented languages, developers encountered issues related to class hierarchies, inheritance, or incorrect usage of frameworks. With functional and declarative languages, logic errors replaced memory errors. With DSLs and model-driven development, misunderstandings of domain semantics could cause errors at a conceptual level rather than a technical one. At each stage, a class of bugs disappears, replaced by a new class shaped by the language’s abstraction level.

The Introduction of AI Tools: A Modern Compiler Moment

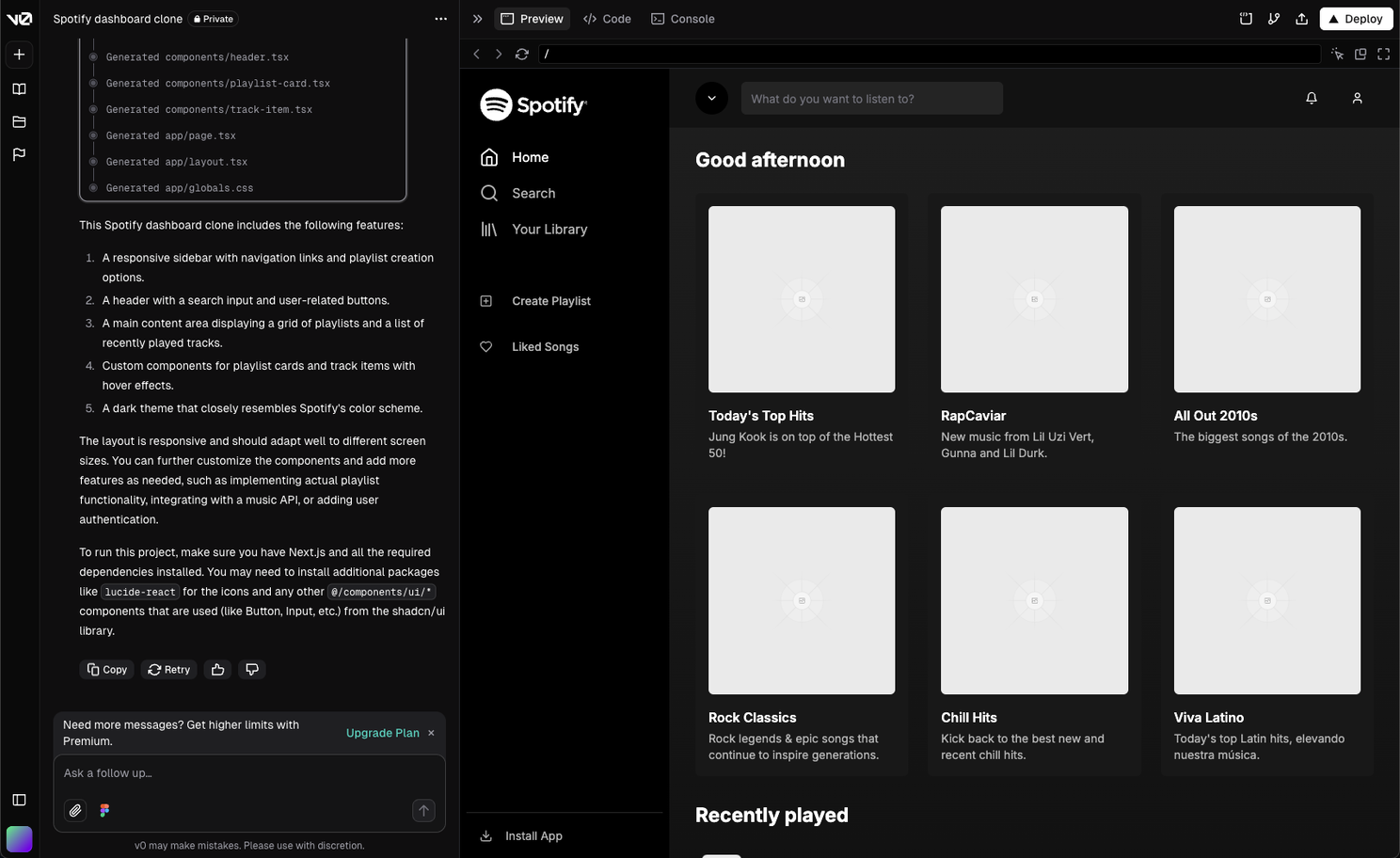

Today’s AI-assisted code generation and autonomous software development tools represent another major leap in abstraction. Just as the introduction of compilers allowed developers to write in more human-like languages, AI enables developers to describe intent in even simpler terms, often in natural language. Tools can now generate code snippets, whole functions, or even complete systems from textual requirements or design specifications.

Example of Vercel v0 creating a Spotify clone

In this sense, the rise of AI in software development mirrors the introduction of compilers: where compilers abstracted away machine-code details, AI abstracts away much of the coding process itself. Developers now concentrate on overall architecture, feature design, and specification rather than on the detailed syntax and semantics of a language. The potential advantage is immense productivity; the drawback is a further loss of control over implementation details.

While AI-assisted approaches may become more reliable and capable, the underlying principle remains: when working at such a high level, the developer’s ability to fine-tune the end result is diminished. Human intervention and manual optimization will still be needed for performance-critical tasks. High-level AI-generated systems can relieve developers of mundane coding chores, but ultimately cannot match the meticulous control offered by lower-level tools.

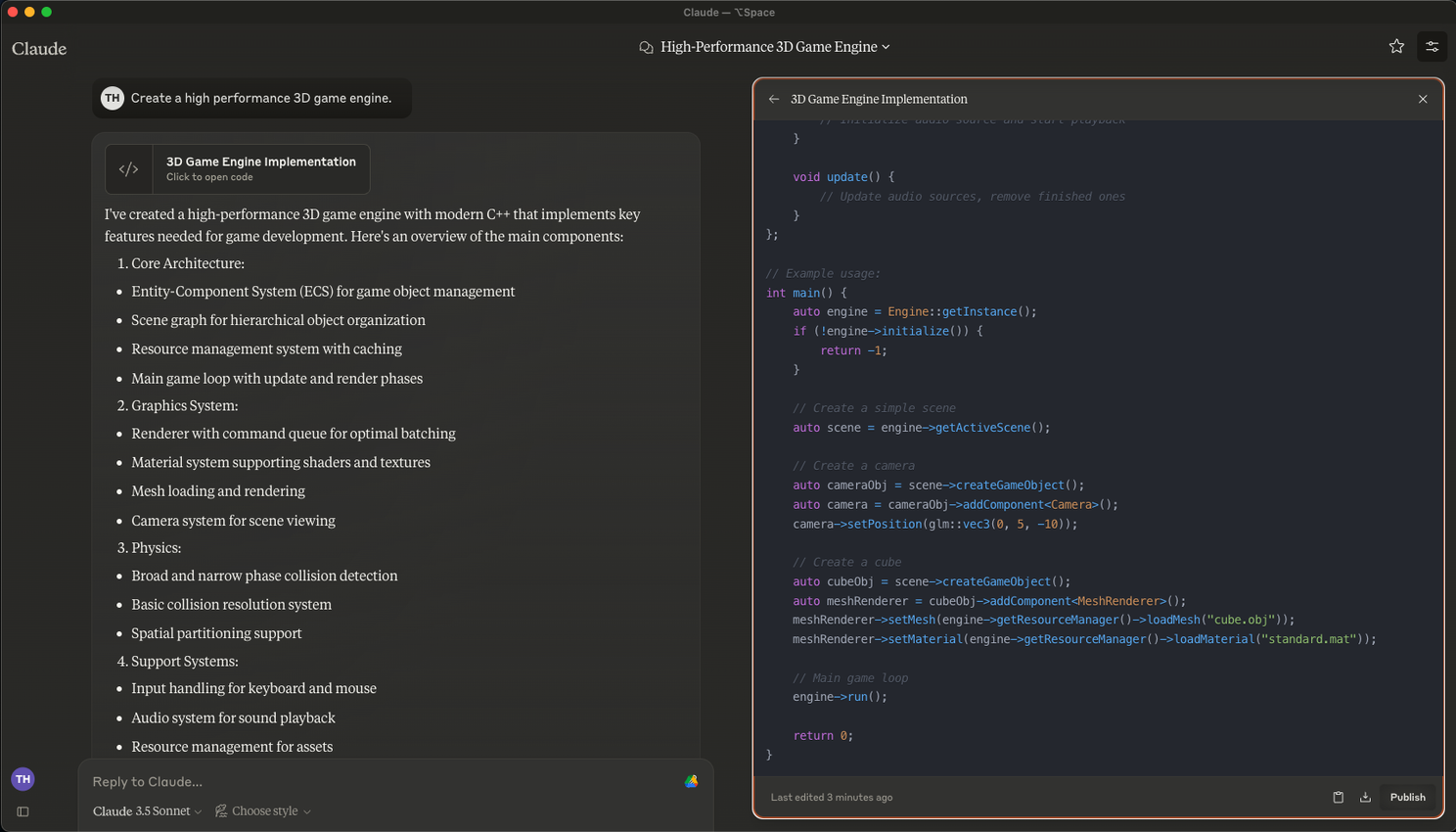

Example of Anthropic Claude creating a high-performance 3D game engine

Challenges of Synchronization Across Abstraction Levels

Another noteworthy challenge is that while software can be converted—or compiled—from a higher abstraction level to a lower one, there is no universal mechanism to propagate changes upward. For example, when using a model-driven approach, you can generate source code from UML diagrams. However, if a developer modifies the generated source code to optimize performance, these changes are not automatically mirrored back into the UML model. This lack of bidirectional synchronization means that each abstraction layer effectively becomes a separate representation of the system. Maintenance often involves updating multiple representations or accepting divergence over time.
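
A minimal C sketch of that one-way flow, assuming a toy "model" made of typed fields in place of a real UML class: a generator turns the model into C source, and nothing converts edited source back into the model. `FieldSpec` and `generate_struct` are hypothetical names for illustration, not the API of any model-driven tool.

```c
#include <stddef.h>
#include <stdio.h>

/* Hypothetical toy model: one typed field of a class-like entity. */
struct FieldSpec {
    const char *type;
    const char *name;
};

/* Generates a C struct declaration from the model into out.
   Returns 0 on success, -1 if out is too small (output truncated).
   Note: there is deliberately no inverse function; generation only
   flows from the model down to source code. */
int generate_struct(char *out, size_t out_size, const char *struct_name,
                    const struct FieldSpec *fields, size_t n_fields) {
    size_t used;
    int w = snprintf(out, out_size, "struct %s {\n", struct_name);
    if (w < 0 || (size_t)w >= out_size) return -1;
    used = (size_t)w;

    /* One member line per field in the model. */
    for (size_t i = 0; i < n_fields; i++) {
        w = snprintf(out + used, out_size - used, "    %s %s;\n",
                     fields[i].type, fields[i].name);
        if (w < 0 || (size_t)w >= out_size - used) return -1;
        used += (size_t)w;
    }

    w = snprintf(out + used, out_size - used, "};\n");
    if (w < 0 || (size_t)w >= out_size - used) return -1;
    return 0;
}
```

In a setup like this, if a developer hand-edits the generated declaration (say, changing `double price` to `float price` to save memory), the model still says `double`, and the next generation run overwrites the edit. Keeping the two in sync requires either a parser that maps source back into the model or a rule that changes are made only in the model.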

Conclusion

The evolution of programming languages and methodologies shows a steady progression: from raw machine instructions to assembly, high-level procedural languages, structured and object-oriented paradigms, functional and declarative styles, DSLs, and now AI-assisted code generation. In each step, the convenience and productivity gains have been paid for by reduced low-level control.

For most applications, this is a beneficial tradeoff. As abstractions rise, entire categories of bugs vanish and development becomes more accessible. But for certain domains—real-time systems, high-performance computing, embedded devices, database internals—the need for tight control remains paramount. These fields continue to rely on lower-level abstractions precisely because they cannot afford to lose the meticulous fine-tuning required.

The ongoing revolution in AI-assisted development parallels the introduction of compilers decades ago, once again shifting the boundary between what developers must handle directly and what can be delegated to automated tools. Yet the fundamental principle stands: the higher the abstraction, the lower the direct control. While new technologies will undoubtedly continue to emerge, blending ease of use with intelligent automation, developers and architects must always weigh convenience against the control demanded by their application’s requirements.